|

Email: xsy9915[at]gmail.com/shiyao.xu[at]unitn.it Github CV Google Scholar Twitter Linkedin 知乎

I am Shiyao Xu (徐诗瑶), a 2nd I obtain my M.Sc. degree from Peking University, China, 2023, supervised by Prof. Zhouhui Lian, and bachelor at Dalian University of Technology, China, 2020. I'm actively looking for internship opportunities for 2026 summer! (or anytime, just an internship🥹!) Please feel free to reach me out!!! |

|

|

|

|

|

|

|

Shiyao Xu, Benedetta Liberatori, Gül Varol, Paolo Rota 3DV 2026 [Project] [PDF] [Code] [Dataset] We propose dense motion captioning task together with a complex motion dataset CompMo includes 60,000 motion sequences, each composed of multiple actions ranging from at least 2~10, and a model DEMO for that task. |

|

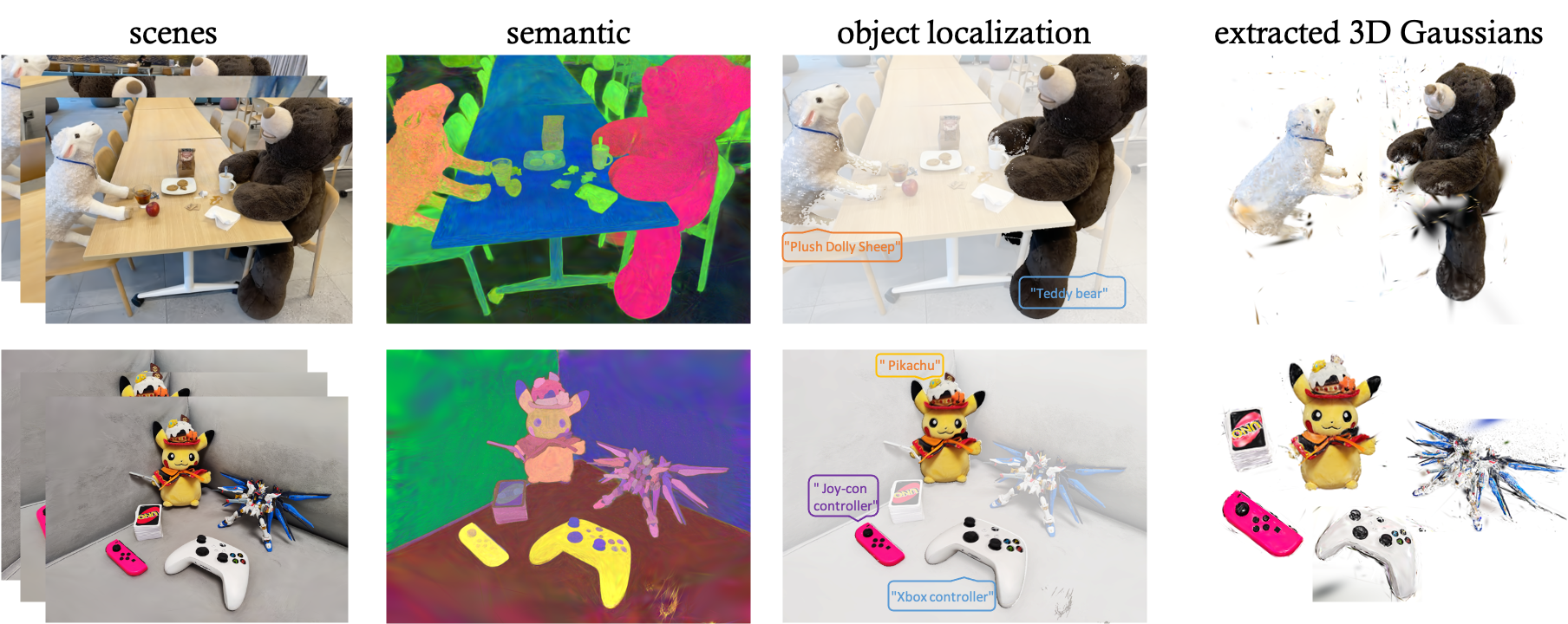

Shiyao Xu, Junlin Han, Jie Yang. got rejected by some conference🥲it's ok, both life and research will encounter some rejections🥹. you can see it below. [PDF] We propose FD-3DGS to distill the semantic information into 3D Gaussians and directly manipulate 3D Gaussians using language. |

|

|

Shiyao Xu, Lingzhi Li, Li Shen, Zhouhui Lian CVPRW 2023, CVPR Workshop on Generative Models for Computer Vision [Project] [PDF] [Code](tbc) [Poster] We propose a more efficient method, DeSRF, to stylize the radiance field, which also transfers style information to the geometry according to the input style. |

|

|

Shiyao Xu, Lingzhi Li, Li Shen, Yifang Men, Zhouhui Lian SIGGRAPH ASIA 2022 Technical Communication [Project](tbc) [PDF] [DOI] [Code] We propose a novel 3D generative model to translate a real-world face image into its corresponding 3D avatar with only a single style example provided. Our model is 3D-aware in sense and also able to do attribute editing, such as smile, age, etc directly in the 3D domain. |

|

|

Guo Pu, Shiyao Xu, Xixin Cao, Zhouhui Lian [PDF] finally available on arxiv... but this is my first project;-) We propose an automatically method to transfer the dynamic texture of a given video to a still image. Abstract

|

|

|

|

2024.07 - 2024.09: 3D Algorithm Engineer at Math Magic. |

|

2023.07 - 2024.05: Research Scientist at Cybever Inc., Mountain View (remotely). |

|

2021.08 - 2023.07: Research Intern in DAMO Academy, Alibaba Group. Mentored by Lingzhi Li, Supervised by Dr. Li Shen. |

|

2021.07 - 2021.08: Machine Learning Intern at Apple Inc., Beijing, China. |

|

|

|

2024.09 - : ELLIS PhD student at University of Trento, Itlay.

|

|

|

2020.09 - 2023.06: M.Sc. in Wangxuan Institute of Computer Techonology(WICT) at Peking University, China.

|

|

2016.09 - 2020.06: B.Eng. in School of Software at Dalian University of Technology, China. Major in Big Data and Machine Learning. |

|

|

|

|

|

|

|

Build the bridge between 2D and 3D world. Do some cool research!😎 Last modified: 05/12/2025 |